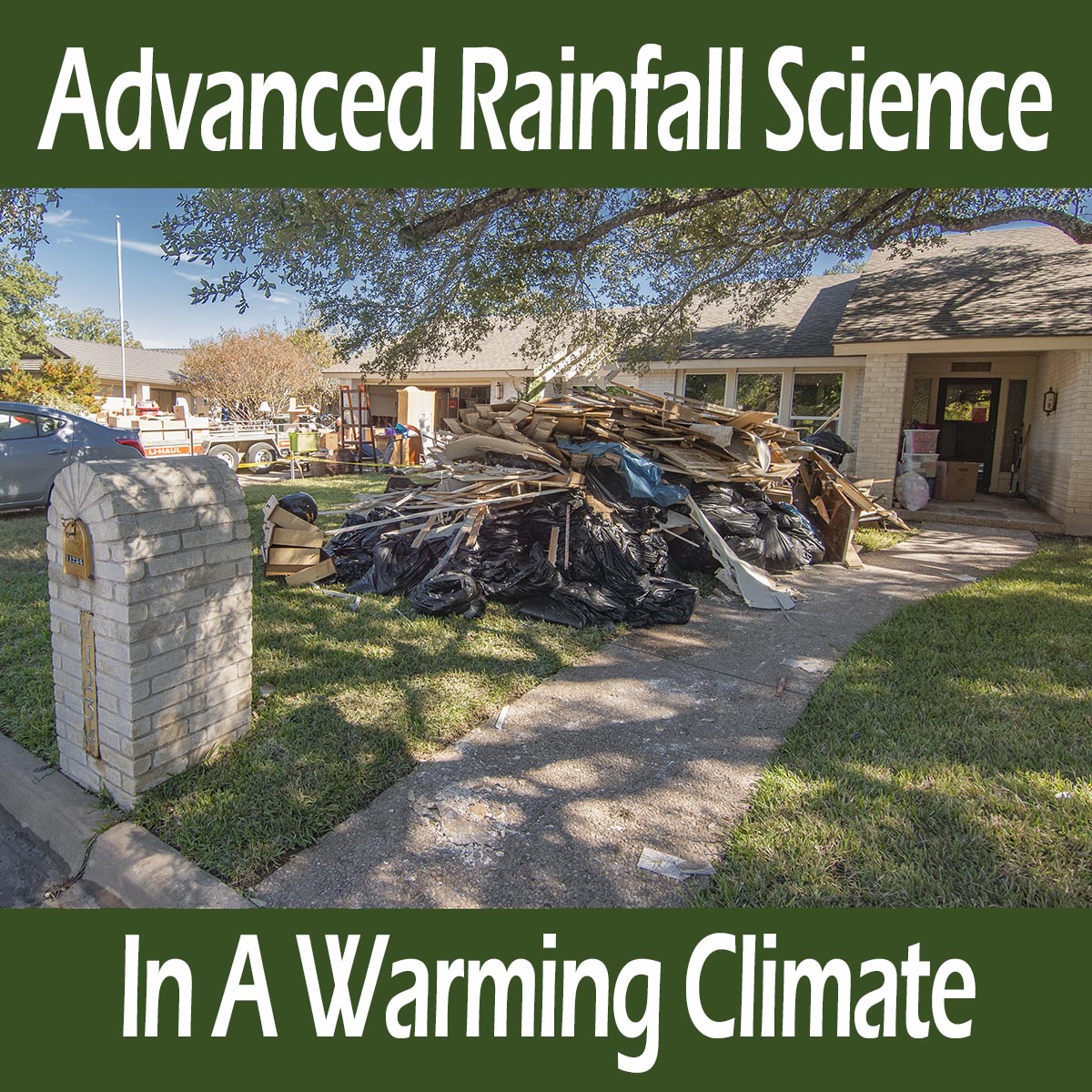

Record flooding on Onion Creek, Austin, October 2013

Hydrology is one of our advanced culture’s most important engineering design areas because so much of our lives depends on not being flooded out, and on not having our streets flood or our roofs collapse from excess rainfall. Hydrology is the study of the Earth’s complex water systems. This field has played a large role in my other career as a land development engineer. Because I have been designing projects using hydrologic concepts and data for 40 years, I have paid close attention to new engineering criteria that take climate change into consideration, and I have delved deeply into climate change hydrology and the National Oceanic and Atmospheric Administration’s (NOAA) Atlas 14. Atlas 14 is an evaluation of rainfall intensity, duration and frequency (IDF: how hard it rains, how much it rains and how often it does both). Atlas 14 for Texas was released in 2018.

This IDF evaluation is an update to NOAA’s previous hydrologic engineering design criteria for Texas. It tells us engineers how much rainfall we can expect when designing our streets, storm sewers, bridges, and buildings, and where flood plains are located so we can keep other land development safe from flooding as well. The worst-case design scenario is usually the 100-year, 24-hour storm, but we also look at the 1-, 2-, 5-, 10-, and 25-year storms for different kinds of designs, as well as a few criteria for the “mother of all storms.” Dams are in this category, where we use either the probable maximum precipitation (PMP) or 1/2 PMP depending on the dam. The 100-year storm is technically defined as the storm with a 1 percent probability of occurrence in any year; the 10-year storm has a 10 percent chance, and so on. In plain English, the 100-year storm occurs once every hundred years, on average over very long time periods. As with any average, this does not mean we get exactly one 100-year storm every 100 years. We might get more, we might get less; it’s an average.
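The “it’s an average” point can be made concrete with a little arithmetic. The following is a minimal Python sketch, not a design tool. It assumes every year is independent and has the same annual chance (that is, a stationary climate), and the function names are my own, chosen only for illustration.

```python
from math import comb


def annual_chance(return_period_years):
    """Chance that the T-year storm is equaled or exceeded in any one year."""
    return 1.0 / return_period_years


def chance_of_exactly(k, return_period_years, span_years):
    """Binomial chance of exactly k T-year storms in a span of years.

    Assumption: years are independent and share the same annual chance.
    """
    p = annual_chance(return_period_years)
    return comb(span_years, k) * p**k * (1.0 - p) ** (span_years - k)


def chance_of_at_least_one(return_period_years, span_years):
    """Chance of one or more T-year storms in a span of years."""
    return 1.0 - chance_of_exactly(0, return_period_years, span_years)


# How many 100-year storms might a 100-year span actually see?
for k in range(3):
    print(f"exactly {k}: {chance_of_exactly(k, 100, 100):.1%}")
print(f"at least one: {chance_of_at_least_one(100, 100):.1%}")
```

Under these assumptions, a 100-year span has roughly a 37 percent chance of seeing no 100-year storm at all, about a 37 percent chance of seeing exactly one, and about a 26 percent chance of seeing two or more.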

The last time NOAA evaluated rainfall intensity in Texas was with two studies in 1961 and 1964, so it was expected that climate warming would increase rainfall rates with Atlas 14, and that it did. In a wide swath along the Gulf of Mexico that extends 200 miles inland to Austin and parts of the Hill Country, the old 100-year storm was evaluated to be the new 25-year storm. That is, the old 100-year storm now happens four times as often, on average over long time periods. The challenge with Atlas 14 is that it is understated because of the way the statistical methods used behave when the data contain new and changing trends. The increasing rainfall we have all been perceiving in many areas is quite real. Rainfall is increasing because warmer air holds more moisture.

Atlas 14 is understated because NOAA sort of made a rookie mistake by using the standard (frequentist) statistics they have been using for 60 years to evaluate IDF. But this is all new, to all of us. Climate change has never happened to us before, so we do not know how to behave. It doesn’t take long for scientists who specialize in this sort of evaluation to make waves when they find this sort of understatement, and it has been a choppy time for a few years now.

This understating problem is common in our warming world, where historic data are used to develop engineering criteria for myriad things other than flooding, such as agricultural, industrial and social applications that require average or normal conditions in the outside world to be considered. It is called the non-stationarity problem. In hydrology, the rainfall records used to perform these IDF evaluations are changing because of warming; the rainfall records are not stationary. For these simple statistical analyses to be valid for normal occurrences in our outside world, the data cannot be changing; they must be stationary. NOAA is aware of this non-stationarity problem and plans for its next rainfall evaluation to use advanced statistical techniques better adapted to a changing climate.
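To show why a stationary analysis of a trending record comes out low, here is a small synthetic experiment in Python. Everything in it is an assumption made for illustration: the Gumbel distribution, the method-of-moments fit, the 60-year record length, and the made-up rainfall numbers (a location parameter drifting from 100 mm to 140 mm). It is not NOAA’s procedure and uses no real gauge data.

```python
import math
import random
import statistics

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def gumbel_depth(loc, scale, return_period_years):
    """Rainfall depth with an annual exceedance chance of 1/T (Gumbel)."""
    non_exceedance = 1.0 - 1.0 / return_period_years
    return loc - scale * math.log(-math.log(non_exceedance))


def fit_gumbel_by_moments(annual_maxima):
    """Stationary fit: one location and one scale for the whole record."""
    scale = statistics.stdev(annual_maxima) * math.sqrt(6.0) / math.pi
    loc = statistics.fmean(annual_maxima) - EULER_GAMMA * scale
    return loc, scale


def synthetic_record(rng, years, loc_first, loc_last, scale):
    """Annual maxima whose Gumbel location drifts linearly over the record."""
    record = []
    for year in range(years):
        loc = loc_first + (loc_last - loc_first) * year / (years - 1)
        u = rng.uniform(1e-12, 1.0 - 1e-12)  # keeps both logarithms finite
        record.append(loc - scale * math.log(-math.log(u)))
    return record


def mean_stationary_estimate(records=2000, years=60, seed=1):
    """Average stationary 100-year estimate over many synthetic gauges."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(records):
        record = synthetic_record(rng, years, 100.0, 140.0, 25.0)
        loc, scale = fit_gumbel_by_moments(record)
        estimates.append(gumbel_depth(loc, scale, 100))
    return statistics.fmean(estimates)


true_first_year = gumbel_depth(100.0, 25.0, 100)  # old climate
true_last_year = gumbel_depth(140.0, 25.0, 100)   # climate at end of record
stationary = mean_stationary_estimate()

print(f"true 100-year depth, first year: {true_first_year:.0f} mm")
print(f"stationary estimate, full record: {stationary:.0f} mm")
print(f"true 100-year depth, last year: {true_last_year:.0f} mm")
```

In this synthetic setup the stationary estimate lands between the old-climate value and the value for the final year of the record. It blends the old climate into the answer, so it is low for the climate that exists when the analysis is finished.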

The physics behind increasing rainfall with climate warming is that atmospheric moisture increases about 7% per degree C of warming. Simply put, with 1 C of warming rainfall should increase by 7%. Our climate is anything but simple, however. Hurricane Harvey produced 15% more rainfall than the same hurricane modeled without climate change (van Oldenborgh 2017). New science says the 7% direct physical increase in moisture applies to run-of-the-mill storms, while about 13% of the globe and almost 25% of the tropics see increases of more than twice that rate, or about 14% per degree C (Villalobos and Neelin 2023). The reason there is more than a 7% increase is dynamics. Take Hurricane Harvey for instance, or any hurricane. Moist rising air in a hurricane condenses into clouds and gives off heat, making the rising air rise faster. This feeds back into the storm by pulling more moist air into the rising air column (convection), which then releases more heat when it condenses into clouds, and so on. This is a rainfall dynamic. Other dynamically changing things also make other storms create more rainfall than the 7% standard physics prescribes.
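The per-degree scaling is easy to put into numbers. This is a minimal Python sketch under stated assumptions: the 10-inch starting depth is a hypothetical design value, the function name is mine, and the increase is compounded per degree, which is a common convention but still an assumption here.

```python
def scaled_rainfall(base_depth, warming_c, rate_per_degree=0.07):
    """Scale a rainfall depth for a given warming, compounding per degree C.

    rate_per_degree=0.07 is the moisture-only rate from the text; 0.14 is
    the roughly doubled rate reported where storm dynamics add to it.
    """
    return base_depth * (1.0 + rate_per_degree) ** warming_c


base_depth_in = 10.0  # hypothetical design depth in inches, not from Atlas 14

for rate in (0.07, 0.14):
    for warming_c in (1.0, 2.0):
        depth = scaled_rainfall(base_depth_in, warming_c, rate)
        print(f"{rate:.0%} per degree, {warming_c:.0f} C warming: {depth:.2f} in")
```

The gap between the two rates widens with each degree of warming, which is why the choice of scaling rate matters more the longer a project is expected to be in service.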

The First Street Foundation has come out with a new evaluation of historic rainfall data that overcomes some of the shortcomings that non-stationarity creates in NOAA’s Atlas 14, which relies on evaluation methods from our old stable climate. First Street is a nonprofit dedicated to interpreting complicated science and providing normal people with counsel on risk and safety. The work they are doing on rainfall IDF is great, better than NOAA’s, but it is still understated.

To address the non-stationarity problem, First Street doesn’t use all the old stable climate data that NOAA uses. That data just does not represent our current climate any longer. The problem, though, that makes First Street’s evaluation understated too is that our current climate is not stationary either. Rainfall continues to become more intense as we warm, so even the most recent data are biased low. Not only that, but there is another problem with non-stationarity that remains lurking in the shadows. It takes time for the 10-year storm to appear in rain gauges, for example. If our climate has only just recently changed in the last five or ten years, the 10-year storm may or may not have occurred, much less the 25-, 50-, or 100-year storms. We do not know what amount of rain, in how much time, these storms will create, and this would be true even if our climate were to magically stabilize or become stationary. As we warm further, the target moves with the warming. Tomorrow’s magically stationary climate will be different from today’s, with more extreme rainfall than today.
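How likely is it that a given storm has even shown up in a short record? A minimal Python sketch follows. It assumes independent years and a climate that holds still over the record, which is the generous case, and the function name is only illustrative.

```python
def chance_observed(return_period_years, record_years):
    """Chance that at least one T-year storm appears in a record.

    Assumption: independent years with a constant annual chance of 1/T.
    """
    annual_chance = 1.0 / return_period_years
    return 1.0 - (1.0 - annual_chance) ** record_years


for record_years in (5, 10):
    for return_period_years in (10, 25, 50, 100):
        chance = chance_observed(return_period_years, record_years)
        print(
            f"{return_period_years}-year storm in a {record_years}-year "
            f"record: {chance:.0%}"
        )
```

Under these assumptions the 10-year storm has only about a 41 percent chance of appearing in a five-year record and about a 65 percent chance in a ten-year record, while the 100-year storm has less than a 10 percent chance of appearing in ten years.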

To further address these non-stationarity problems, First Street applied a “correction estimate” to the newer data based on the mid-point of the last 20 years of rainfall data, centered on 2011, and used one of the latest climate models, the Coupled Model Intercomparison Project Phase 6 (CMIP6), which integrates ocean and land processes together, to estimate future climate change conditions. They did not, however, state the future climate scenario assumed. This makes a difference. If future warming is assumed to be greater, rainfall will be greater of course. We do not know what future warming scenario First Street used. The estimate they used increases future rainfall by some amount, but because of missing information in their evaluation report, the amount of the increase is not clear. This could be trouble, as the amount is likely based on CMIP6, which, like all computer modeling of extremes, is understated (see references).

Future climate modeling of extremes is significantly trickier than modeling future averages with further warming. Averages are easy. Simply run the model a couple dozen times and average the results together. This kind of modeling has performed well, so far. But extremes are another challenge altogether. The reason is feedbacks and our old friend, dynamics. There are so many feedbacks, and we are just beginning to understand many of them. They tend to amplify dynamics rather than diminish them because of one of the fundamentals of the physics of energy: warming creates nonlinearly more energy in the environment (the enthalpy of moist air), so most feedbacks that occur will be greater because of the nonlinear relationship between warming and energy.

Understating acknowledgement in First Street’s work… First Street’s authors acknowledge that their method understates: “Given the limitation of the 20-year length of the historical data time series, the First Street Foundation analysis still has the issue that the data themselves do not fully resolve the current year’s extreme precipitation, and must rely on extreme value theory and mathematical techniques to estimate precipitation at higher return periods.” Also missing from their report is an explanation of the “extreme value theory and mathematical techniques to estimate precipitation at higher return periods,” where higher return periods represent events rarer than would have occurred, on average, since our climate changed away from its natural variation five or ten years ago.

In plain language, First Street guesses at an increasing trend based on the last 20 years of rainfall data that includes a fair amount of data from our old climate, plus results from CMIP6 that has known understating issues, plus they do not reveal the future climate change scenario used in CMIP6.

All in all though, as Texas State Climatologist John Nielsen-Gammon told me when we were discussing non-stationarity in Texas’s Atlas 14 update in 2018, it was better than nothing. First Street’s new estimate goes one better than Atlas 14, even though large unknowns remain and it is quite likely that First Street remains understated.

Looking at First Street’s results… Their evaluation shows changes from the previous NOAA IDF evaluation results across the country. These changes are mostly increases, with some areas staying the same and some decreasing. This is pretty much what the models say, though models are really poor on quantities. The greatest increases are seen in the Northeast and Gulf Coast areas, with a focus on Texas. The increases are measured against NOAA’s IDF evaluations, which were done at various times, starting in 1961 and 1964 for short- and long-duration rain events for the contiguous 48 states. Then in 1973 NOAA began publishing work on Atlas 2 for the six northwestern states, and in 1986 they produced further work on these six states. In 1973 NOAA began work on the Atlas 14 project with 11 publications for various combinations of all contiguous 48 states except the six northwestern states, ending with Texas (2018) and the Northeast (2019). The six northwestern states have yet to be updated.

The changes from current NOAA evaluations to First Street’s work must be viewed with the base period in mind. The earlier the base period, the greater the plausible change. NOAA’s work up to the beginnings of the Atlas 14 project showed little if any change from the natural variation of our old climate because warming prior to this time remained within the boundaries of our old climate. As time progressed in the 21st century, more warming-related increases in precipitation were incorporated into NOAA’s work, which remained understated because of the non-stationarity problem, with the largest increases in rainfall being the most recent.

First Street’s specific results for Austin and Houston, Texas… Their evaluation shows a zero to 40% increase over Atlas 14 in the Austin region and a greater than 50% increase in the Houston region. Cheng and AghaKouchak 2014 show that the non-stationarity understatement can be as much as 60 percent. An independent evaluation of Atlas 14 relative to NOAA’s previous rainfall analysis in Leander, Texas (a suburban area NW of Austin) shows flood volume increased by about 50 percent because of Atlas 14’s rainfall increases alone, even though Atlas 14 is itself understated.

The bottom line is that flood and stormwater runoff engineering should include additional large safety factors for fail-safe flood protection, not only for Atlas 14, but for First Street’s evaluation as well. These safety factors should consider First Street’s work relative to when NOAA’s previous rainfall evaluations were performed, and include an added safety factor for understated CMIP6 modelling of future conditions that is dependent upon the life of the project being designed.

~ ~ ~

REFERENCES

Austin and Houston, NOAA’s Atlas 14 says the 100-year storm is now the 25-year storm… “The study, published as NOAA Atlas 14, Volume 11 Precipitation-Frequency Atlas of the United States, Texas, found increased values in parts of Texas, including larger cities such as Austin and Houston, that will result in changes to the rainfall amounts that define 100-year events, which are those that on average occur every 100 years or have a one percent chance of happening in any given year. In Austin, for example, 100-year rainfall amounts for 24 hours increased as much as three inches up to 13 inches. 100-year estimates around Houston increased from 13 inches to 18 inches and values previously classified as 100-year events are now much more frequent 25-year events.”

NOAA updates Texas rainfall frequency values, National Oceanic and Atmospheric Administration, September 27, 2018.

https://www.noaa.gov/media-release/noaa-updates-texas-rainfall-frequency-values

Hurricane Harvey (2017) was made three times more likely because of climate change… “During August 25–30, 2017, Hurricane Harvey stalled over Texas and caused extreme precipitation, particularly over Houston and the surrounding area on August 26–28. This resulted in extensive flooding with over 80 fatalities and large economic costs. It was an extremely rare event: the return period of the highest observed three-day precipitation amount, 1043.4 mm 3dy−1 at Baytown, is more than 9000 years (97.5% one-sided confidence interval) and return periods exceeded 1000 yr (750 mm 3dy−1) over a large area in the current climate. Observations since 1880 over the region show a clear positive trend in the intensity of extreme precipitation of between 12% and 22%, roughly two times the increase of the moisture holding capacity of the atmosphere expected for 1°C warming according to the Clausius–Clapeyron (CC) relation. This would indicate that the moisture flux was increased by both the moisture content and stronger winds or updrafts driven by the heat of condensation of the moisture… Our scientific analysis found that human-caused climate change made the record rainfall that fell over Houston during Hurricane Harvey roughly three times more likely and 15 percent more intense.”

van Oldenborgh et al., Attribution of extreme rainfall from Hurricane Harvey, August 2017, Environmental Research Letters, December 13, 2017.

https://iopscience.iop.org/article/10.1088/1748-9326/aa9ef2/pdf

BEGIN NON-STATIONARITY REFERENCES

Climate Normals and Stationarity – The non-stationarity problem with frequentist statistics… Our National Weather Service climate normals assume a stationary data set or a climate that is not changing; “U.S. Climate Normals – Used by meteorological organizations around the world for analyzing climate conditions, climate normals are average values of temperature and precipitation over a period of time, typically 30 years, and are updated approximately every 10 years. The approach assumes a stationary climate and came into use about 75 years ago by the World Meteorological Organization (WMO), which sets standards of methodology.” Accessed December 2021.

https://www.ncei.noaa.gov/news/accounting-natural-variability-our-changing-climate

Frequentist statistics are a problem with extreme event attribution caused by climate change… “The treatment of uncertainty in climate-change science is dominated by the far-reaching influence of the ‘frequentist’ tradition in statistics, which interprets uncertainty in terms of sampling statistics and emphasizes p-values and statistical significance. This is the normative standard in the journals where most climate-change science is published. Yet a sampling distribution is not always meaningful (there is only one planet Earth). Moreover, scientific statements about climate change are hypotheses, and the frequentist tradition has no way of expressing the uncertainty of a hypothesis. As a result, in climate-change science, there is generally a disconnect between physical reasoning and statistical practice.” … “Extreme Events… A frequency is at best a useful mathematical model of unexplained variability. The analogy that is often made of increased risk from climate change is that of loaded dice. But if a die turns up 6, whether loaded or unloaded, it is still a 6. On the other hand, if an extreme temperature threshold is exceeded only very rarely in pre-industrial climate vs quite often in present day climate, the nature of these exceedances will be different. One is in the extreme tail of the distribution, and the other is not, so they correspond to very different meteorological situations and will be associated with very different temporal persistence, correlation with other fields, and so on… The fact is that climate change changes everything; the scientific question is not whether, but how and by how much… The scientific challenge here is that for pretty much any relevant dynamical conditioning factor for extreme events, there is very little confidence in how it will change under climate change (Shepherd 2019). This lack of strong prior knowledge arises from a combination of small signal-to-noise ratio in observations, inconsistent projections from climate models, and the lack of any consensus theory. If one insists on a frequentist interpretation of this second factor, as in PEA, then this can easily lead to inconclusive results, and that is indeed what tends to happen for extreme events that are not closely tied to global-mean warming (NAS 2016).”

Shepherd, Bringing physical reasoning into statistical practice in climate-change science, Climatic Change, November 1, 2021.

https://link.springer.com/content/pdf/10.1007/s10584-021-03226-6.pdf

Non-stationarity of Data – Problem Statement… Problem Statement #1, page 2, “Ignoring time-variant (non-stationary) behavior of extremes could potentially lead to underestimating extremes and failure of infrastructures and considerable damage to human life and society.”

Cheng, Frameworks for Univariate and Multivariate Non-Stationary Analysis of Climatic Extremes, Dissertation, University of California, Irvine, 2014.

https://escholarship.org/content/qt16x3s2cp/qt16x3s2cp_noSplash_df70523d588ef903df391e21a1bfd201.pdf

Non-stationarity of Data Problem – General Discussion

Statistical analysis assumes data do not include a changing trend, or that the data are stationary. This allows evaluation of the past to predict the future, if boundary conditions in the past are the same as in the future. If boundary conditions change, robust statistical evaluation is not possible unless the data are assumed to be stationary. This is a catch-22, or a dilemma or difficult circumstance from which there is no escape because of mutually conflicting or dependent conditions. (Oxford) This problem is particularly troublesome with climate change data that have an increasing nonlinear trend, where for example, rainfall is increasing nonlinearly with warming. Non-stationarity can be dealt with to some extent using sophisticated analysis, but if a data evaluation states “the data are assumed to be stationary,” sophisticated statistics were not used and the results of the analysis cannot be relied upon. “In the most intuitive sense, stationarity means that the statistical properties of a process generating a time series do not change over time. It does not mean that the series does not change over time, just that the way it changes does not itself change over time.”

Palachy, Stationarity in time series analysis – A review of the concept and types of stationarity, Towards Data Science (independent forum)

https://towardsdatascience.com/stationarity-in-time-series-analysis-90c94f27322

Non-stationarity can result in significant understatement… “The World Meteorological Organization (WMO) standard recommends estimating climatological normals as the average of observations over a 30-year period. This approach may lead to strongly biased normals in a changing climate.”

Rigal et al., Estimating daily climatological normals in a changing climate, Climate Dynamics, December 15, 2018.

https://hal.archives-ouvertes.fr/hal-01980565/document

Non-stationarity underestimates extreme precipitation by as much as 60 percent… “Extreme climatic events are growing more severe and frequent, calling into question how prepared our infrastructure is to deal with these changes. Current infrastructure design is primarily based on precipitation Intensity-Duration-Frequency (IDF) curves with the so-called stationary assumption, meaning extremes will not vary significantly over time. However, climate change is expected to alter climatic extremes, a concept termed nonstationarity. Here we show that given nonstationarity, current IDF curves can substantially underestimate precipitation extremes and thus, they may not be suitable for infrastructure design in a changing climate. We show that a stationary climate assumption may lead to underestimation of extreme precipitation by as much as 60%, which increases the flood risk and failure risk in infrastructure systems.”

Cheng and AghaKouchak, Nonstationary Precipitation Intensity-Duration-Frequency Curves for Infrastructure Design in a Changing Climate, Nature Scientific Reports, November 18, 2014.

https://www.nature.com/articles/srep07093

END NON-STATIONARITY REFERENCES

Extreme rainfall increases nonlinearly, with about 13% of the globe and almost 25% of the tropics seeing a 14% increase in rainfall volume per degree C, instead of the normal 7% increase per degree… “Daily precipitation extremes are projected to intensify with increasing moisture under global warming following the Clausius-Clapeyron (CC) relationship at about 7%/°C. However, this increase is not spatially homogeneous. Projections in individual models exhibit regions with substantially larger increases than expected from the CC scaling. Here, we leverage theory and observations of the form of the precipitation probability distribution to substantially improve intermodel agreement in the medium to high precipitation intensity regime, and to interpret projected changes in frequency in the Coupled Model Intercomparison Project Phase 6. Besides particular regions where models consistently display super-CC behavior, we find substantial occurrence of super-CC behavior within a given latitude band when the multi-model average does not require that the models agree point-wise on location within that band. About 13% of the globe and almost 25% of the tropics (30% for tropical land) display increases exceeding 2CC. Over 40% of tropical land points exceed 1.5CC. Risk-ratio analysis shows that even small increases above CC scaling can have disproportionately large effects in the frequency of the most extreme events. Risk due to regional enhancement of precipitation scale increase by dynamical effects must thus be included in vulnerability assessment even if locations are imprecise.”

Villalobos and Neelin, Regionally high risk increase for precipitation extreme events under global warming, Nature Scientific Reports, April 5, 2023.

https://www.nature.com/articles/s41598-023-32372-3

First Street Intensity Duration and Frequency evaluation of Rainfall for the Contiguous United States.

https://firststreet.org/research-lab/published-research/article-highlights-from-the-precipitation-problem/

BEGIN UNDERSTATED MODELING REFERENCES

Models understate risks of nonlinearly rapid responses… Abstract, “Climate response metrics are used to quantify the Earth’s climate response to anthropogenic changes of atmospheric CO2. Equilibrium climate sensitivity (ECS) is one such metric that measures the equilibrium response to CO2 doubling. However, both in their estimation and their usage, such metrics make assumptions on the linearity of climate response, although it is known that, especially for larger forcing levels, response can be nonlinear. Such nonlinear responses may become visible immediately in response to a larger perturbation, or may only become apparent after a long transient period. In this paper, we illustrate some potential problems and caveats when estimating ECS from transient simulations. We highlight ways that very slow time scales may lead to poor estimation of ECS even if there is seemingly good fit to linear response over moderate time scales. Moreover, such slow processes might lead to late abrupt responses (late tipping points) associated with a system’s nonlinearities. We illustrate these ideas using simulations on a global energy balance model with dynamic albedo. We also discuss the implications for estimating ECS for global climate models, highlighting that it is likely to remain difficult to make definitive statements about the simulation times needed to reach an equilibrium.”

Press Release – University of Copenhagen –

https://www.eurekalert.org/news-releases/975474

Bastiaansen et al., Climate response and sensitivity, time scales and late tipping points, Proceedings of the Royal Society A, January 4, 2023.

https://royalsocietypublishing.org/doi/10.1098/rspa.2022.0483

Tipping and Cascading climate events (extreme changes) are understated in modeling… “The Earth system and the human system are intrinsically linked. Anthropogenic greenhouse gas emissions have led to the climate crisis, which is causing unprecedented extreme events and could trigger Earth system tipping elements. Physical and social forces can lead to tipping points and cascading effects via feedbacks and telecoupling, but the current generation of climate-economy models do not generally take account of these interactions and feedbacks. Here, we show the importance of the interplay between human societies and Earth systems in creating tipping points and cascading effects and the way they in turn affect sustainability and security. The lack of modeling of these links can lead to an underestimation of climate and societal risks as well as how societal tipping points can be harnessed to moderate physical impacts. This calls for the systematic development of models for a better integration and understanding of Earth and human systems at different spatial and temporal scales, specifically those that enable decision-making to reduce the likelihood of crossing local or global tipping points.”

Franzke et al., Perspectives on tipping points in integrated models of the natural and human Earth system: cascading effects and telecoupling, Environmental Research Letters, January 5, 2022.

https://iopscience.iop.org/article/10.1088/1748-9326/ac42fd/pdf

Science Advances 2020: attribution of extreme events caused or enhanced by climate change frequently underestimates the probability of unprecedented extremes… “Previously published results based on a 1961–2005 attribution period frequently underestimate the influence of global warming on the probability of unprecedented extremes… The underestimation is reflected in discrepancies between probabilities predicted during the attribution period and frequencies observed during the out-of-sample verification period. These discrepancies are most explained by increases in climate forcing between the attribution and verification periods, suggesting that 21st-century global warming has substantially increased the probability of unprecedented hot and wet events.”

Diffenbaugh, Verification of extreme event attribution: Using out-of-sample observations to assess changes in probabilities of unprecedented events, Science Advances, March 18, 2020.

https://www.science.org/doi/epdf/10.1126/sciadv.aay2368

The majority of models underestimated the impacts of the 2003 European heat wave meaning “societal risks from future extreme events may be greater than previously thought”… “Global impact models represent process-level understanding of how natural and human systems may be affected by climate change. Their projections are used in integrated assessments of climate change. Here we test, for the first time, systematically across many important systems, how well such impact models capture the impacts of extreme climate conditions. Using the 2003 European heat wave and drought as a historical analogue for comparable events in the future, we find that a majority of models underestimate the extremeness of impacts in important sectors such as agriculture, terrestrial ecosystems, and heat-related human mortality, while impacts on water resources and hydropower are overestimated in some river basins; and the spread across models is often large. This has important implications for economic assessments of climate change impacts that rely on these models. It also means that societal risks from future extreme events may be greater than previously thought.”

Schewe et al., State-of-the-art global models underestimate impacts from climate extremes, Nature Communications, March 1, 2019.

https://www.nature.com/articles/s41467-019-08745-6

Models understate… “The Human System has become strongly dominant within the Earth System in many different ways. However, in current models that explore the future of humanity and environment, and guide policy, key Human System variables, such as demographics, inequality, economic growth, and migration, are not coupled with the Earth System but are instead driven by exogenous estimates such as United Nations (UN) population projections. This makes the models likely to miss important feedbacks in the real Earth–Human system that may result in unexpected outcomes requiring very different policy interventions. The importance of humanity’s sustainability challenges calls for collaboration of natural and social scientists to develop coupled Earth–Human system models for devising effective science-based policies and measures.”

Motesharrei et al., Modeling sustainability: population, inequality, consumption, and bidirectional coupling of Earth and Human Systems, National Science Review, December 11, 2016.

https://academic.oup.com/nsr/article/3/4/470/2669331

Modeling Abrupt Change Uncertainties Large… Given the huge complexity of comprehensive process-based climate models and the non-linearity and regional peculiarities of the processes involved, the uncertainties associated with the possible future occurrence of abrupt shifts are large and not well quantified.

Bathiany et al., Beyond bifurcation: using complex models to understand and predict abrupt climate change, Dynamics and Statistics of the Climate System, November 19, 2016.

https://academic.oup.com/climatesystem/article-pdf/1/1/dzw004/9629859/dzw004.pdf

END UNDERSTATED MODELING REFERENCES

Nonlinearly increasing energy with warming… Enthalpy of moist air – a little warming creates nonlinearly more energy in the environment because of the enthalpy of water vapor.

https://www.researchgate.net/figure/Entropy-s-AV-panel-a-and-enthalpy-h-AV-panel-b-of-humid-air-in-equilibrium-with_fig14_314331082

Atlas 14 in Leander, Texas… The Leander Flood Study shows Atlas 14 increases runoff flows through the study area by an average of 48 to 60 percent because of increasing rainfall intensity and frequency using NOAA’s Atlas 14 (2018), and when impervious cover changes were included the increase was 50 to 100 percent. It is important to note that Atlas 14 is understated because of non-stationary data.

(Report not available on the internet) City of Leander H&H Technical Report_20210913.pdf